Module tsflex.features

Feature extraction submodule.

Feature extraction guide

The following sections will explain the feature extraction module in detail.

Working example ✅

tsflex is built to be intuitive, so we encourage you to copy-paste this code and toy with some parameters!

This executable example creates a feature-collection that contains 2 features (skewness and minimum). Note that tsflex does not make any assumptions about the sampling rate of the time-series data.

```python
import numpy as np
import pandas as pd
import scipy.stats as ss

from tsflex.features import FeatureCollection, FeatureDescriptor, FuncWrapper

# 1. -------- Get your time-indexed data --------
# Data contains 1 column; ["TMP"]
url = "https://github.com/predict-idlab/tsflex/raw/main/examples/data/empatica/"
data = pd.read_parquet(url + "tmp.parquet").set_index("timestamp")

# 2. -------- Construct your feature collection --------
fc = FeatureCollection(
    feature_descriptors=[
        FeatureDescriptor(
            function=FuncWrapper(func=ss.skew, output_names="skew"),
            series_name="TMP",
            window="5min", stride="2.5min",
        )
    ]
)

# -- 2.1. Add features to your feature collection
# NOTE: tsflex allows features to have different windows and strides
fc.add(FeatureDescriptor(np.min, "TMP", "2.5min", "2.5min"))

# 3. -------- Calculate features --------
fc.calculate(data=data, return_df=True)  # which outputs:
```

| timestamp | TMP__amin__w=1m_s=30s | TMP__skew__w=2m_s=1m |
|---|---|---|
| 2017-06-13 14:23:13+02:00 | 27.37 | nan |
| 2017-06-13 14:23:43+02:00 | 27.37 | nan |
| 2017-06-13 14:24:13+02:00 | 27.43 | 10.8159 |
| 2017-06-13 14:24:43+02:00 | 27.81 | nan |
| 2017-06-13 14:25:13+02:00 | 28.23 | -0.0327893 |
| … | … | … |

Note that the column names of this output (w=1m_s=30s and w=2m_s=1m) correspond to shorter windows and strides than those used in the snippet above.
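The example above relies on tsflex and on downloading the TMP data. To get a feel for what a time-based window and stride mean, the following pandas-only sketch applies a function to windows [start, start + window) whose start moves forward by the stride. It is an illustration under assumptions, not tsflex's segmentation code: the helper name `strided_apply`, the random toy data, and the choice to label each window by its end time are all hypothetical.

```python
from typing import Callable

import numpy as np
import pandas as pd


def strided_apply(
    s: pd.Series, func: Callable[[np.ndarray], float], window: str, stride: str
) -> pd.Series:
    """Apply `func` to windows [start, start + window), moving `start` by `stride`."""
    window_td, stride_td = pd.Timedelta(window), pd.Timedelta(stride)
    results = {}
    start = s.index[0]
    while start + window_td <= s.index[-1]:
        in_window = (s.index >= start) & (s.index < start + window_td)
        # This sketch labels each window by its end time
        results[start + window_td] = func(s.values[in_window])
        start += stride_td
    return pd.Series(results, dtype=float)


# Toy data: 40 temperature-like samples, one every 30 seconds (random values)
idx = pd.date_range("2017-06-13 14:00", periods=40, freq="30s")
tmp = pd.Series(np.random.default_rng(0).normal(27.5, 0.3, len(idx)), index=idx)

# "2min30s" is the same duration as "2.5min"
tmp_min = strided_apply(tmp, np.min, window="5min", stride="2min30s")
print(tmp_min)
```

With 19.5 minutes of data, a 5-minute window, and a 2.5-minute stride, this yields 6 windows of 10 samples each.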

Tip

More advanced feature-extraction examples can be found in these example notebooks

Getting started 🚀

The feature-extraction functionality of tsflex is provided by a FeatureCollection that contains FeatureDescriptors. The features are calculated (in a parallel manner) on the data that is passed to the feature collection.

Components

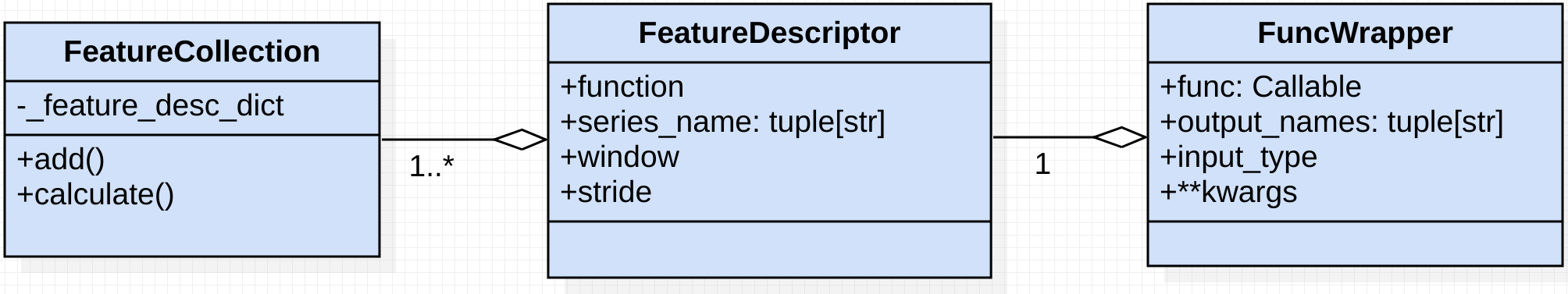

As shown above, there are 3 relevant classes for feature-extraction.

- FeatureCollection: serves as a registry that holds the to-be-calculated features.
- FeatureDescriptor: an instance of this class describes a feature. Features are defined by:
  - series_name: the names of the input series on which the feature function will operate
  - function: the Callable feature function, e.g. np.mean
  - window: the sample- or time-based window, e.g. 200 or "2days"
  - stride: the sample- or time-based stride, e.g. 15 or "1hour"
- FuncWrapper: wraps Callable feature functions and is intended for feature-function configuration. FuncWrappers are defined by:
  - func: the wrapped feature function
  - output_names: custom and/or multiple feature output names
  - input_type: the data type the feature function expects as input, e.g. a pd.Series or np.array
  - **kwargs: additional keyword arguments that are passed to func
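The **kwargs entry means that extra keyword arguments are handed to func on every call. For instance, FuncWrapper(np.quantile, output_names="q90", q=0.9) should compute the 90th percentile of each window. The sketch below mimics that forwarding with functools.partial on a single toy window; it only illustrates the idea and is not how FuncWrapper is implemented.

```python
from functools import partial

import numpy as np

# In tsflex, the keyword argument is given to the wrapper, e.g.
#   FuncWrapper(np.quantile, output_names="q90", q=0.9)
# Here functools.partial stands in for that forwarding (illustration only).
q90 = partial(np.quantile, q=0.9)

window = np.arange(11, dtype=float)  # toy window: 0.0, 1.0, ..., 10.0
print(q90(window))  # same as np.quantile(window, q=0.9)
```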

The snippet below shows how the FeatureCollection & FeatureDescriptor components work together:

```python
import numpy as np
import scipy.stats as ss

from tsflex.features import FeatureCollection, FeatureDescriptor

# The FeatureCollection takes a List[FeatureDescriptor] as input
# There is no need for using a FuncWrapper when dealing with simple feature functions
fc = FeatureCollection(
    feature_descriptors=[
        FeatureDescriptor(np.mean, "series_a", "1hour", "15min"),
        FeatureDescriptor(ss.skew, "series_b", "3hours", "5min"),
    ]
)

# We can still add features after instantiating.
fc.add(features=[FeatureDescriptor(np.std, "series_a", "1hour", "15min")])

# Calculate the features
fc.calculate(...)
```

Feature functions

A feature function needs to match this prototype:

```python
function(*series: Union[np.ndarray, pd.Series], **kwargs) -> Union[Any, List[Any]]
```

Hence, a feature function should take one (or more) arrays as its first positional arguments, optionally followed by keyword arguments.

The output of a feature function can be rather versatile (e.g., a float, integer, string, bool, … or a list thereof).

Note that the feature function may also take more than one series as input. If the function requires pd.Series instead of np.ndarray input, it should be wrapped in a FuncWrapper with the input_type argument set to pd.Series.

More information on these feature functions is given in the advanced usage section.
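A few toy functions that fit the prototype above, each taking a np.ndarray window as its first argument: one returns a float, one a bool (with a keyword argument), and one a list of values. The function names are hypothetical; the list-returning one would need a FuncWrapper with matching output_names when used in tsflex, as explained in the advanced usage section.

```python
from typing import List

import numpy as np


def mean_abs_change(x: np.ndarray) -> float:
    """One series in, one float out."""
    return float(np.mean(np.abs(np.diff(x))))


def exceeds(x: np.ndarray, threshold: float = 0.0) -> bool:
    """A keyword argument follows the series; the output is a bool."""
    return bool(np.any(x > threshold))


def quartiles(x: np.ndarray) -> List[float]:
    """One series in, three values out (25th, 50th and 75th percentile)."""
    return [float(q) for q in np.quantile(x, [0.25, 0.5, 0.75])]


x = np.array([1.0, 3.0, 2.0, 5.0])  # a toy window
print(mean_abs_change(x), exceeds(x, threshold=4.0), quartiles(x))
```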

Multiple feature descriptors

Sometimes it can get overly verbose when the same feature is shared over multiple series, windows and/or strides. To solve this problem, we introduce the MultipleFeatureDescriptors. This component creates a feature descriptor for every function - series_name(s) - window combination, each with the given stride(s).

As shown in the example below, a MultipleFeatureDescriptors instance can be added to a FeatureCollection.

```python
import numpy as np
import scipy.stats as ss

from tsflex.features import FeatureDescriptor, FeatureCollection
from tsflex.features import MultipleFeatureDescriptors

# There is no need for using a FuncWrapper when dealing with simple feature functions
fc = FeatureCollection(
    feature_descriptors=[
        FeatureDescriptor(np.mean, "series_a", "1hour", "15min"),
        FeatureDescriptor(ss.skew, "series_b", "3hours", "5min"),
        # Expands to a list of feature descriptors: one for every
        # function - series - window combination below, each using the strides
        MultipleFeatureDescriptors(
            functions=[np.min, np.max, np.std, ss.skew],
            series_names=["series_a", "series_b", "series_c"],
            windows=["5min", "15min"],
            strides=["1min", "2min", "3min"],
        ),
    ]
)

# Calculate the features
fc.calculate(...)
```
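Following the MultipleFeatureDescriptors source code further below, the expansion takes the product of functions, series names and windows, and passes the complete strides list to every resulting FeatureDescriptor. The sketch mirrors that with itertools.product on placeholder strings, so the arguments of the snippet above yield 4 * 3 * 2 = 24 descriptors.

```python
import itertools

# Placeholders for the arguments of the MultipleFeatureDescriptors above
functions = ["np.min", "np.max", "np.std", "ss.skew"]
series_names = [("series_a",), ("series_b",), ("series_c",)]
windows = ["5min", "15min"]
strides = ["1min", "2min", "3min"]

# One (function, series_name, window, strides) tuple per combination, mirroring
# the itertools.product loop in MultipleFeatureDescriptors.__init__
descriptors = [
    (function, series_name, window, strides)
    for function, series_name, window in itertools.product(
        functions, series_names, windows
    )
]
print(len(descriptors))  # 4 * 3 * 2 = 24
```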

Output format

The output of the FeatureCollection's .calculate() is a (list of) sequence-indexed pd.DataFrame(s) with column names:

<SERIES-NAME>__<FEAT-NAME>__w=<WINDOW>_s=<STRIDE>.

The column name for the np.std feature on series_a (window "1hour", stride "15min") from the Getting started snippet will thus be series_a__std__w=1h_s=15m.

When the windows and strides are defined in a sample-based manner (which is mandatory for non-datetime-indexed data), a possible output column would be series_a__std__w=100_s=15, where the window and stride are expressed in samples rather than as time strings.
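Because the column names follow a fixed pattern (e.g. TMP__amin__w=1m_s=30s in the example output above), they can be built and split with plain string operations, for instance to select all features computed with a given window. Both helpers below are hypothetical and assume that neither the series name nor the feature name contains a double underscore.

```python
from typing import Tuple


def column_name(series: str, feature: str, window: str, stride: str) -> str:
    """Hypothetical helper: <SERIES-NAME>__<FEAT-NAME>__w=<WINDOW>_s=<STRIDE>."""
    return f"{series}__{feature}__w={window}_s={stride}"


def parse_column_name(column: str) -> Tuple[str, str, str, str]:
    """Hypothetical inverse; assumes no '__' inside the series or feature name."""
    series, feature, window_stride = column.split("__")
    window, stride = window_stride.split("_s=")
    return series, feature, window[len("w="):], stride


print(column_name("series_a", "std", "1h", "15m"))
print(parse_column_name("TMP__amin__w=1m_s=30s"))
```

Selecting, say, all 1-minute-window columns then becomes a list comprehension over the DataFrame's columns that compares the third element of parse_column_name.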

Note

You can find more information about the input data-formats in this section and read more about the (obvious) limitations in the next section.

Limitations ⚠️

It is important to note that there are still some, albeit logical, limitations regarding the supported data format.

These limitations are:

- Each ts must be in the flat/wide data format, and all ts need to have the same sequence-index dtype, which needs to be sortable.
  - It just doesn't make sense to have a mix of different sequence-index dtypes. Imagine a FeatureCollection to which a ts with a pd.DatetimeIndex is passed, together with a ts with a pd.RangeIndex. Both indexes are not comparable, which would make the result counterintuitive.
- tsflex has no support for multi-indexes & multi-columns.
- tsflex assumes that each ts has a unique name. Hence, no duplicate ts names are allowed.
  - Countermeasure: rename your ts.
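These limitations can be checked before calling .calculate(). The find_input_problems helper below is a hypothetical sketch, not part of tsflex: for a list of pd.Series it reports duplicate names, mixed index dtypes, and multi-indexes.

```python
from typing import List

import pandas as pd


def find_input_problems(series_list: List[pd.Series]) -> List[str]:
    """Hypothetical pre-flight check mirroring the limitations listed above."""
    problems = []
    names = [s.name for s in series_list]
    duplicates = sorted({n for n in names if names.count(n) > 1})
    if duplicates:
        problems.append(f"duplicate series names {duplicates}: rename them")
    if len({s.index.dtype for s in series_list}) > 1:
        problems.append("the series do not share the same index dtype")
    if any(isinstance(s.index, pd.MultiIndex) for s in series_list):
        problems.append("multi-indexes are not supported")
    return problems


idx = pd.date_range("2024-01-01", periods=3, freq="1s")
acc = pd.Series([0.1, 0.2, 0.3], index=idx, name="ACC")
acc_copy = pd.Series([0.4, 0.5, 0.6], index=idx, name="ACC")
hr = pd.Series([60, 61, 62], name="HR")  # default RangeIndex

print(find_input_problems([acc, acc_copy]))                   # duplicate name
print(find_input_problems([acc, acc_copy.rename("ACC_2")]))   # renamed: no problems
print(find_input_problems([acc, hr]))                         # DatetimeIndex vs RangeIndex
```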

Important notes 📢

tsflex supports various data types, e.g. np.float32, string data, and time-based data. However, it is the end user's responsibility to use a function that interplays nicely with the data's format.

Advanced usage 👀

Also take a look at the .reduce() and .serialize() methods.

Versatile functions

As explained above, tsflex is rather versatile in terms of function input and output.

tsflex does not just support one-to-one processing functions; many-to-one, one-to-many, and many-to-many functions are conveniently supported as well:

- many-to-one: the feature function should
  - take multiple series as input
  - output a single value

The function should (usually) not be wrapped in a FuncWrapper. Note that the series_name argument now requires a tuple of the ordered input series names. Example:

```python
import numpy as np
from tsflex.features import FeatureDescriptor


def abs_sum_diff(s1: np.ndarray, s2: np.ndarray) -> float:
    # The windows of both series may differ in length,
    # so only the overlapping part is compared
    min_len = min(len(s1), len(s2))
    return np.sum(np.abs(s1[:min_len] - s2[:min_len]))


fd = FeatureDescriptor(
    abs_sum_diff, series_name=("series_1", "series_2"),
    window="5m", stride="2m30s",
)
```

- one-to-many: the feature function should
  - take a single series as input
  - output multiple values

The function should be wrapped in a FuncWrapper to register its multiple output names. Example:

```python
from typing import Tuple

import numpy as np
from tsflex.features import FeatureDescriptor, FuncWrapper


def abs_stats(s: np.ndarray) -> Tuple[float, float, float, float]:
    s_abs = np.abs(s)
    return np.min(s_abs), np.max(s_abs), np.mean(s_abs), np.std(s_abs)


output_names = ["abs_min", "abs_max", "abs_mean", "abs_std"]
fd = FeatureDescriptor(
    FuncWrapper(abs_stats, output_names=output_names),
    series_name="series_1", window="5m", stride="2m30s",
)
```

- many-to-many: the feature function should
  - take multiple series as input
  - output multiple values

The function should be wrapped in a FuncWrapper to register its multiple output names. Example:

```python
from typing import Tuple

import numpy as np
from tsflex.features import FeatureDescriptor, FuncWrapper


def abs_stats_diff(s1: np.ndarray, s2: np.ndarray) -> Tuple[float, float, float]:
    min_len = min(len(s1), len(s2))
    # Element-wise absolute differences (not summed, so that min/max/mean differ)
    s_abs_diff = np.abs(s1[:min_len] - s2[:min_len])
    return np.min(s_abs_diff), np.max(s_abs_diff), np.mean(s_abs_diff)


output_names = ["abs_diff_min", "abs_diff_max", "abs_diff_mean"]
fd = FeatureDescriptor(
    FuncWrapper(abs_stats_diff, output_names=output_names),
    series_name=("series_1", "series_2"), window="5m", stride="2m30s",
)
```
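Before registering a many-to-many (or one-to-many) function, it can help to call it on toy windows and pair its outputs with output_names; a length mismatch points to a wrong output_names list. The sketch below does this for abs_stats_diff with windows of unequal length, which can occur with multivariate data. The toy arrays are made up.

```python
from typing import Tuple

import numpy as np


def abs_stats_diff(s1: np.ndarray, s2: np.ndarray) -> Tuple[float, float, float]:
    min_len = min(len(s1), len(s2))
    s_abs_diff = np.abs(s1[:min_len] - s2[:min_len])
    return np.min(s_abs_diff), np.max(s_abs_diff), np.mean(s_abs_diff)


output_names = ["abs_diff_min", "abs_diff_max", "abs_diff_mean"]

# Toy windows of unequal length (5 vs. 4 samples)
s1 = np.array([1.0, 4.0, 2.0, 8.0, 5.0])
s2 = np.array([2.0, 1.0, 2.0, 4.0])

values = abs_stats_diff(s1, s2)
if len(values) != len(output_names):
    raise ValueError("output_names does not match the number of outputs")
features = dict(zip(output_names, values))
print(features)
```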

Note

As visible in the feature function prototype, both np.array and pd.Series are supported function input types.

If your feature function requires pd.Series as input (instead of the default np.array), the function should be wrapped in a FuncWrapper with the input_type argument set to pd.Series.

An example of a function that leverages the pd.Series datatype:

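```python
import numpy as np
import pandas as pd
from scipy.stats import linregress
from tsflex.features import FeatureDescriptor, FuncWrapper


def linear_trend_timewise(s: pd.Series):
    # Time difference (in hours) between each timestamp and the first timestamp;
    # this requires the index of the pd.Series, which a np.ndarray does not carry
    times_hours = np.asarray((s.index - s.index[0]).total_seconds() / 3600)
    lin_reg = linregress(times_hours, s.values)
    return lin_reg.slope, lin_reg.intercept, lin_reg.rvalue


fd = FeatureDescriptor(
    FuncWrapper(
        linear_trend_timewise,
        output_names=["twise_regr_slope", "twise_regr_intercept", "twise_regr_r_value"],
        input_type=pd.Series,
    ),
    # Placeholder series name, window and stride (as in the examples above)
    series_name="series_1", window="5m", stride="2m30s",
)
```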
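To see why the pd.Series input matters, the sketch below applies a variant of linear_trend_timewise to irregularly spaced toy timestamps. It swaps scipy's linregress for np.polyfit and np.corrcoef (an assumption made to keep the example NumPy/pandas-only); because the toy values follow 2 * hours + 1 exactly, the slope should come out as 2, the intercept as 1, and the r-value as 1.

```python
import numpy as np
import pandas as pd


def linear_trend_timewise_np(s: pd.Series):
    """Variant of linear_trend_timewise using np.polyfit/np.corrcoef."""
    hours = (s.index - s.index[0]).total_seconds().to_numpy() / 3600
    values = s.to_numpy(dtype=float)
    slope, intercept = np.polyfit(hours, values, deg=1)
    r_value = np.corrcoef(hours, values)[0, 1]
    return slope, intercept, r_value


# Irregularly spaced toy timestamps; the values follow 2 * hours + 1 exactly
index = pd.to_datetime(
    ["2024-01-01 00:00", "2024-01-01 00:30", "2024-01-01 02:00", "2024-01-01 03:15"]
)
s = pd.Series([1.0, 2.0, 5.0, 7.5], index=index)

slope, intercept, r_value = linear_trend_timewise_np(s)
print(slope, intercept, r_value)
```

With a plain np.ndarray, the function would only see the sample positions 0, 1, 2, 3 and would ignore the uneven spacing of the timestamps.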

Multivariate-data

tsflex makes no assumptions about the sequence ranges of the data. However, the end user must take some things into consideration:

- By using the bound_method argument of .calculate(), the end user can specify whether the "inner" or "outer" data bounds will be used for generating the slice ranges.
- All ts must have the same data-index dtype. This makes them comparable and allows for generating same-range slices on multivariate data.
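The exact behavior of bound_method is defined by tsflex. The sketch below assumes that "inner" means the range covered by all series (the overlap of their index ranges) and "outer" the range covered by any series; data_bounds is a hypothetical helper and the toy series are made up.

```python
from typing import Any, List, Tuple

import pandas as pd


def data_bounds(series_list: List[pd.Series], bound_method: str = "inner") -> Tuple[Any, Any]:
    """Hypothetical helper: 'inner' = overlap of all index ranges,
    'outer' = span covering all index ranges."""
    starts = [s.index.min() for s in series_list]
    ends = [s.index.max() for s in series_list]
    if bound_method == "inner":
        return max(starts), min(ends)
    if bound_method == "outer":
        return min(starts), max(ends)
    raise ValueError(f"unknown bound_method: {bound_method!r}")


# Two toy series with different sample rates and different ranges
acc = pd.Series(range(6), index=pd.date_range("2024-01-01 10:00", periods=6, freq="1min"))
temp = pd.Series(range(4), index=pd.date_range("2024-01-01 10:02", periods=4, freq="2min"))

print(data_bounds([acc, temp], "inner"))  # 10:02 to 10:05
print(data_bounds([acc, temp], "outer"))  # 10:00 to 10:08
```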

Irregularly sampled data

Strided-rolling feature extraction on irregularly sampled data results in varying feature-segment sizes.

When using multivariate data, with either different sample rates or with an irregular data-rate, you cannot make the assumption that all windows will have the same length. Your feature extraction method should thus be:

- robust for varying length windows

- robust for (possible) empty windows
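A minimal sketch of such robustness, assuming the goal is to return NaN instead of failing on empty or too-short windows. The robust wrapper below is hypothetical and is not tsflex's make_robust, whose exact behavior may differ. functools.wraps keeps the wrapped function's name, which may matter when output names are derived from it.

```python
import functools

import numpy as np


def robust(func, min_samples: int = 1, default=np.nan):
    """Hypothetical wrapper: return `default` for windows shorter than `min_samples`."""

    @functools.wraps(func)
    def wrapper(x, *args, **kwargs):
        x = np.asarray(x)
        if x.size < min_samples:
            return default
        return func(x, *args, **kwargs)

    return wrapper


robust_max = robust(np.max)                 # np.max raises on an empty array
robust_std = robust(np.std, min_samples=2)  # a 1-sample std is not meaningful

print(robust_max(np.array([])))            # empty window
print(robust_max(np.array([1.0, 5.0, 2.0])))
print(robust_std(np.array([3.0])))         # too short
```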

Tip

For conveniently creating such robust features we suggest using the make_robust function.

Note

A warning will be raised when irregularly sampled data is observed.

In order to avoid this warning, the user should explicitly approve that there may be sparsity in the data by setting the approve_sparsity flag to True in the .calculate() method.

Logging

When a logging_file_path is passed to the FeatureCollection's .calculate() method, the execution times of the feature functions will be logged.

This is especially useful to identify which feature functions take a long time to compute.
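The statistics functions in the API reference below aggregate such logs with a pandas groupby. The sketch mirrors the aggregation in the source of get_series_names_stats on a made-up execution log; the toy durations and the way the "duration %" column is computed here are assumptions for illustration.

```python
import pandas as pd

# Made-up execution log: one row per feature calculation (durations in seconds)
log = pd.DataFrame(
    {
        "series_names": ["TMP", "TMP", "ACC", "ACC"],
        "window": ["5m"] * 4,
        "stride": ["2m30s"] * 4,
        "duration": [0.2, 0.3, 1.1, 0.9],
    }
)
# Assumption: "duration %" is each row's share of the total duration
log["duration %"] = 100 * log["duration"] / log["duration"].sum()

# Same aggregation as in the source of get_series_names_stats
stats = (
    log.groupby(["series_names", "window", "stride"])
    .agg(
        {
            "duration": ["sum", "mean", "std", "count"],
            "duration %": ["sum", "mean"],
        }
    )
    .sort_values(by=("duration", "sum"), ascending=False)
)
print(stats)
```

Sorting on the summed duration puts the most expensive series first, which is what makes this view handy for spotting slow features.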

```python
"""Feature extraction submodule.

.. include:: ../../docs/pdoc_include/features.md
"""

__author__ = "Jonas Van Der Donckt, Jeroen Van Der Donckt, Emiel Deprost"

from .. import __pdoc__
from .feature import FeatureDescriptor, MultipleFeatureDescriptors
from .feature_collection import FeatureCollection
from .function_wrapper import FuncWrapper
from .logger import get_feature_logs, get_function_stats, get_series_names_stats
from .segmenter import StridedRollingFactory

__pdoc__["FuncWrapper.__call__"] = True

__all__ = [
    "FeatureDescriptor",
    "MultipleFeatureDescriptors",
    "FeatureCollection",
    "FuncWrapper",
    "StridedRollingFactory",
    "get_feature_logs",
    "get_function_stats",
    "get_series_names_stats",
]
```

API reference of tsflex.features

- .feature: FeatureDescriptor and MultipleFeatureDescriptors class for creating time-series features.
- .feature_collection: FeatureCollection class for bookkeeping and calculation of time-series features …
- .function_wrapper: FuncWrapper class for object-oriented representation of a function.
- .integrations: Wrappers for seamless integration of feature functions from other packages.
- .logger: Contains the used variables and functions to provide logging functionality …
- .segmenter: Series segmentation submodule.
- .utils: Utility functions for more convenient feature extraction.

Functions

def get_feature_logs(logging_file_path)

```python
def get_feature_logs(logging_file_path: str) -> pd.DataFrame:
    """Get execution (time) info for each feature of a `FeatureCollection`.

    Parameters
    ----------
    logging_file_path: str
        The file path where the logged messages are stored. This is the file path that
        is passed to the `FeatureCollection` its `calculate` method.

    Returns
    -------
    pd.DataFrame
        A DataFrame with the features its function, input series names and
        (%) calculation duration.

    """
    df = _parse_logging_execution_to_df(logging_file_path)
    df["duration"] = pd.to_timedelta(df["duration"], unit="s")
    return df
```

Get execution (time) info for each feature of a FeatureCollection.

Parameters

logging_file_path: str - The file path where the logged messages are stored. This is the file path that is passed to the FeatureCollection's calculate method.

Returns

pd.DataFrame - A DataFrame with each feature's function, input series names, and (%) calculation duration.

def get_function_stats(logging_file_path)

```python
def get_function_stats(logging_file_path: str) -> pd.DataFrame:
    """Get execution (time) statistics for each function.

    Parameters
    ----------
    logging_file_path: str
        The file path where the logged messages are stored. This is the file path that
        is passed to the `FeatureCollection` its `calculate` method.

    Returns
    -------
    pd.DataFrame
        A DataFrame with for each function (i.e., `function-(window,stride)`)
        combination the mean (time), std (time), sum (time), sum (% time),
        mean (% time),and number of executions.

    """
    df = _parse_logging_execution_to_df(logging_file_path)

    # Get the sorted functions in a list to use as key for sorting the groups
    sorted_funcs = (
        df.groupby(["function"])
        .agg({"duration": ["mean"]})
        .sort_values(by=("duration", "mean"), ascending=True)
        .index.to_list()
    )

    def key_func(idx_level):  # type: ignore[no-untyped-def]
        if all(idx in sorted_funcs for idx in idx_level):
            return [sorted_funcs.index(idx) for idx in idx_level]
        return idx_level

    return (
        df.groupby(["function", "window", "stride"])
        .agg(
            {
                "duration": ["sum", "mean", "std", "count"],
                "duration %": ["sum", "mean"],
            }
        )
        .sort_index(key=key_func, ascending=False)
    )
```

Get execution (time) statistics for each function.

Parameters

logging_file_path: str - The file path where the logged messages are stored. This is the file path that is passed to the FeatureCollection's calculate method.

Returns

pd.DataFrame - A DataFrame with, for each function (i.e., function-(window,stride)) combination, the mean (time), std (time), sum (time), sum (% time), mean (% time), and number of executions.

def get_series_names_stats(logging_file_path)

```python
def get_series_names_stats(logging_file_path: str) -> pd.DataFrame:
    """Get execution (time) statistics for each `key-(window,stride)` combination.

    Parameters
    ----------
    logging_file_path: str
        The file path where the logged messages are stored. This is the file path that
        is passed to the `FeatureCollection` its `calculate` method.

    Returns
    -------
    pd.DataFrame
        A DataFrame with for each function the mean (time), std (time), sum (time),
        sum (% time), mean (% time), and number of executions.

    """
    df = _parse_logging_execution_to_df(logging_file_path)

    return (
        df.groupby(["series_names", "window", "stride"])
        .agg(
            {
                "duration": ["sum", "mean", "std", "count"],
                "duration %": ["sum", "mean"],
            }
        )
        .sort_values(by=("duration", "sum"), ascending=False)
    )
```

Get execution (time) statistics for each key-(window,stride) combination.

Parameters

logging_file_path: str - The file path where the logged messages are stored. This is the file path that is passed to the FeatureCollection's calculate method.

Returns

pd.DataFrame - A DataFrame with, for each key-(window,stride) combination, the mean (time), std (time), sum (time), sum (% time), mean (% time), and number of executions.

Classes

class FeatureDescriptor (function, series_name, window=None, stride=None)

```python
class FeatureDescriptor(FrozenClass):
    """A FeatureDescriptor object, containing all feature information.

    Parameters
    ----------
    function : Union[FuncWrapper, Callable]
        The function that calculates the feature(s).
        The prototype of the function should match: \n

            function(*series: Union[np.array, pd.Series]) -> Union[Any, List[Any]]

        Note that when the input type is ``pd.Series``, the function should be
        wrapped in a `FuncWrapper` with `input_type` = ``pd.Series``.
    series_name : Union[str, Tuple[str, ...]]
        The names of the series on which the feature function should be applied.
        This argument should match the `function` its input; \n
        * If `series_name` is a string (or tuple of a single string), then
          `function` should require just one series as input.
        * If `series_name` is a tuple of strings, then `function` should
          require `len(tuple)` series as input **and in exactly the same order**
    window : Union[float, str, pd.Timedelta], optional
        The window size. By default None. This argument supports multiple types: \n
        * If None, the `segment_start_idxs` and `segment_end_idxs` will need to be
          passed.
        * If the type is an `float` or an `int`, its value represents the series
            - its window **range** when a **non time-indexed** series is passed.
            - its window in **number of samples**, when a **time-indexed** series is
              passed (must then be and `int`)
        * If the window's type is a `pd.Timedelta`, the window size represents
          the window-time-range. The passed data **must have a time-index**.
        * If a `str`, it must represents a window-time-range-string. The **passed
          data must have a time-index**.
        .. Note::
            - When the `segment_start_idxs` and `segment_end_idxs` are both passed
              to the `FeatureCollection.calculate` method, this window argument is
              ignored. Note that this is the only case when it is allowed to pass
              None for the window argument.
            - When the window argument is None, than the stride argument should be
              None as well (as it makes no sense to pass a stride value when the
              window is None).
    stride : Union[float, str, pd.Timedelta, List[Union[float, str, pd.Timedelta]]], optional
        The stride size(s). By default None. This argument supports multiple types: \n
        * If None, the stride will need to be passed to `FeatureCollection.calculate`.
        * If the type is an `float` or an `int`, its value represents the series
            - its stride **range** when a **non time-indexed** series is passed.
            - the stride in **number of samples**, when a **time-indexed** series
              is passed (must then be and `int`)
        * If the stride's type is a `pd.Timedelta`, the stride size represents
          the stride-time delta. The passed data **must have a time-index**.
        * If a `str`, it must represent a stride-time-delta-string. The **passed
          data must have a time-index**. \n
        * If a `List[Union[float, str, pd.Timedelta]]`, then the set intersection,of
          the strides will be used (e.g., stride=[2,3] -> index: 0, 2, 3, 4, 6, 8, 9, ...)
        .. Note::
            - The stride argument of `FeatureCollection.calculate` takes precedence
              over this value when set (i.e., not None value for `stride` passed to
              the `calculate` method).
            - The stride argument should be None when the window argument is None
              (as it makes no sense to pass a stride value when the window is None).

    .. Note::
        As described above, the `window-stride` argument can be sample-based (when
        using time-index series and int based arguments), but we do **not
        encourage** using this for `time-indexed` sequences. As we make the implicit
        assumption that the time-based data is sampled at a fixed frequency
        So only, if you're 100% sure that this is correct, you can safely use such
        arguments.

    Notes
    -----
    * The `window` and `stride` argument should be either **both** numeric or
      ``pd.Timedelta`` (depending on de index datatype) - when `stride` is not None.
    * For each `function` - `input`(-series) - `window` - stride combination, one
      needs to create a distinct `FeatureDescriptor`. Hence it is more convenient to
      create a `MultipleFeatureDescriptors` when `function` - `window` - `stride`
      **combinations** should be applied on various input-series (combinations).
    * When `function` takes **multiple series** (i.e., arguments) as **input**,
      these are joined (based on the index) before applying the function. If the
      indexes of these series are not exactly the same, it might occur that not all
      series have exactly the same length! Hence, make sure that the `function` can
      deal with this!
    * For more information about the str-based time args, look into:
      [pandas time delta](https://pandas.pydata.org/pandas-docs/stable/user_guide/timedeltas.html#parsing){:target="_blank"}

    Raises
    ------
    TypeError
        * Raised when the `function` is not an instance of Callable or FuncWrapper.
        * Raised when `window` and `stride` are not of exactly the same type (when
          `stride` is not None).

    See Also
    --------
    StridedRolling: As the window-stride sequence conversion takes place there.

    """

    def __init__(
        self,
        function: Union[FuncWrapper, Callable],
        series_name: Union[str, Tuple[str, ...]],
        window: Optional[Union[float, str, pd.Timedelta]] = None,
        stride: Optional[
            Union[float, str, pd.Timedelta, List[Union[float, str, pd.Timedelta]]]
        ] = None,
    ):
        strides = sorted(set(to_list(stride)))  # omit duplicate stride values
        if window is None:
            assert strides == [None], "stride must be None if window is None"
        self.series_name: Tuple[str, ...] = to_tuple(series_name)
        self.window = parse_time_arg(window) if isinstance(window, str) else window
        if strides == [None]:
            self.stride = None
        else:
            self.stride = [
                parse_time_arg(s) if isinstance(s, str) else s for s in strides
            ]

        # Verify whether window and stride are either both sequence or time based
        dtype_set = set(
            AttributeParser.determine_type(v)
            for v in [self.window] + to_list(self.stride)
        ).difference([DataType.UNDEFINED])
        if len(dtype_set) > 1:
            raise TypeError(
                f"a combination of window ({self.window} type={type(self.window)}) and"
                f" stride ({self.stride}) is not supported!"
            )

        # Order of if statements is important (as FuncWrapper also is a Callable)!
        if isinstance(function, FuncWrapper):
            self.function: FuncWrapper = function
        elif isinstance(function, Callable):  # type: ignore[arg-type]
            self.function: FuncWrapper = FuncWrapper(function)  # type: ignore[no-redef]
        else:
            raise TypeError(
                "Expected feature function to be `Callable` or `FuncWrapper` but is a"
                f" {type(function)}."
            )

        # Construct a function-string
        f_name = str(self.function)
        self._func_str: str = f"{self.__class__.__name__} - func: {f_name}"

        self._freeze()

    def get_required_series(self) -> List[str]:
        """Return all required series names for this feature descriptor.

        Return the list of series names that are required in order to execute the
        feature function.

        Returns
        -------
        List[str]
            List of all the required series names.

        """
        return list(set(self.series_name))

    def get_nb_output_features(self) -> int:
        """Return the number of output features of this feature descriptor.

        Returns
        -------
        int
            Number of output features.

        """
        return len(self.function.output_names)

    def __repr__(self) -> str:
        """Representation string of Feature."""
        return (
            f"{self.__class__.__name__}({self.series_name}, {self.window}, "
            f"{self.stride})"
        )
```

A FeatureDescriptor object, containing all feature information.

Parameters

function: Union[FuncWrapper, Callable]

The function that calculates the feature(s). The prototype of the function should match:

function(*series: Union[np.array, pd.Series]) -> Union[Any, List[Any]]

Note that when the input type is pd.Series, the function should be wrapped in a FuncWrapper with input_type=pd.Series.

series_name: Union[str, Tuple[str, …]]

The names of the series on which the feature function should be applied. This argument should match the function's input:

- If series_name is a string (or a tuple of a single string), then function should require just one series as input.
- If series_name is a tuple of strings, then function should require len(tuple) series as input, in exactly the same order.

window: Union[float, str, pd.Timedelta], optional

The window size. By default None. This argument supports multiple types:

- If None, the segment_start_idxs and segment_end_idxs will need to be passed.
- If the type is a float or an int, its value represents:
  - the window range, when a non-time-indexed series is passed;
  - the window in number of samples, when a time-indexed series is passed (it must then be an int).
- If the window's type is a pd.Timedelta, the window size represents the window time range. The passed data must have a time index.
- If a str, it must represent a window time-range string. The passed data must have a time index.

Note

- When the segment_start_idxs and segment_end_idxs are both passed to the .calculate() method, this window argument is ignored. Note that this is the only case in which it is allowed to pass None for the window argument.
- When the window argument is None, then the stride argument should be None as well (as it makes no sense to pass a stride value when the window is None).

stride: Union[float, str, pd.Timedelta, List[Union[float, str, pd.Timedelta]]], optional

The stride size(s). By default None. This argument supports multiple types:

- If None, the stride will need to be passed to .calculate().
- If the type is a float or an int, its value represents:
  - the stride range, when a non-time-indexed series is passed;
  - the stride in number of samples, when a time-indexed series is passed (it must then be an int).
- If the stride's type is a pd.Timedelta, the stride size represents the stride time delta. The passed data must have a time index.
- If a str, it must represent a stride time-delta string. The passed data must have a time index.
- If a List[Union[float, str, pd.Timedelta]], then the set union of the strides will be used (e.g., stride=[2,3] -> index: 0, 2, 3, 4, 6, 8, 9, …).

Note

- The stride argument of .calculate() takes precedence over this value when set (i.e., when a non-None stride is passed to the calculate method).
- The stride argument should be None when the window argument is None (as it makes no sense to pass a stride value when the window is None).
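The stride-list example above can be reproduced with plain Python sets: the window start indices of each integer stride are combined with a set union. The helper name strided_starts and its stop argument are hypothetical.

```python
from typing import Iterable, List


def strided_starts(strides: Iterable[int], stop: int) -> List[int]:
    """Hypothetical helper: union of the window start indices of several strides."""
    starts = set()
    for stride in strides:
        starts |= set(range(0, stop, stride))
    return sorted(starts)


print(strided_starts([2, 3], stop=10))  # [0, 2, 3, 4, 6, 8, 9]
```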

Note

As described above, the window and stride arguments can be sample-based (when using time-indexed series and int arguments), but we do not encourage this for time-indexed sequences, as it relies on the implicit assumption that the time-based data is sampled at a fixed frequency. Only use such arguments if you are 100% sure that this assumption holds.

Notes

- The window and stride arguments should either both be numeric or both be pd.Timedelta (depending on the index datatype) when stride is not None.
- For each function - input(-series) - window - stride combination, one needs to create a distinct FeatureDescriptor. Hence, it is more convenient to create a MultipleFeatureDescriptors when function - window - stride combinations should be applied to various input series (combinations).
- When function takes multiple series (i.e., arguments) as input, these are joined (based on the index) before applying the function. If the indexes of these series are not exactly the same, not all series may have the same length! Hence, make sure that the function can deal with this.
- For more information about the str-based time arguments, see the pandas time delta documentation (https://pandas.pydata.org/pandas-docs/stable/user_guide/timedeltas.html#parsing).

Raises

TypeError

- Raised when the function is not an instance of Callable or FuncWrapper.
- Raised when window and stride are not of the same type, i.e., not both numeric or both time-based (when stride is not None).

See Also

StridedRolling - As the window-stride sequence conversion takes place there.

Ancestors

- tsflex.utils.classes.FrozenClass

Methods

def get_required_series(self)

Return all required series names for this feature descriptor.

Return the list of series names that are required in order to execute the feature function.

Returns

List[str]- List of all the required series names.

def get_nb_output_features(self)

Return the number of output features of this feature descriptor.

Returns

int- Number of output features.

class MultipleFeatureDescriptors (functions, series_names, windows=None, strides=None)

```python
class MultipleFeatureDescriptors:
    """Create a MultipleFeatureDescriptors object.

    Create a list of features from **all** combinations of the given parameter
    lists. Total number of created `FeatureDescriptor`s will be:

        len(func_inputs)*len(functions)*len(windows)*len(strides).

    Parameters
    ----------
    functions : Union[FuncWrapper, Callable, List[Union[FuncWrapper, Callable]]]
        The functions, can be either of both types (even in a single array).
    series_names : Union[str, Tuple[str, ...], List[str], List[Tuple[str, ...]]]
        The names of the series on which the feature function should be applied.

        * If `series_names` is a (list of) string (or tuple of a single string),
          then each `function` should require just one series as input.
        * If `series_names` is a (list of) tuple of strings, then each `function`
          should require `len(tuple)` series as input.

        A `list` implies that multiple multiple series (combinations) will be used
        to extract features from; \n
        * If `series_names` is a string or a tuple of strings, then `function` will
          be called only once for the series of this argument.
        * If `series_names` is a list of either strings or tuple of strings, then
          `function` will be called for each entry of this list.

        .. Note::
            when passing a list as `series_names`, all items in this list should
            have the same type, i.e, either \n
            * all a str
            * or, all a tuple _with same length_.\n
            And perfectly match the func-input size.

    windows : Union[float, str, pd.Timedelta, List[Union[float, str, pd.Timedelta]]]
        All the window sizes.
    strides : Union[float, str, pd.Timedelta, None, List[Union[float, str, pd.Timedelta]]], optional
        All the strides. By default None.

    Note
    ----
    The `windows` and `strides` argument should be either both numeric or
    ``pd.Timedelta`` (depending on de index datatype) - when `strides` is not None.

    """

    def __init__(
        self,
        functions: Union[FuncWrapper, Callable, List[Union[FuncWrapper, Callable]]],
        series_names: Union[str, Tuple[str, ...], List[str], List[Tuple[str, ...]]],
        windows: Optional[
            Union[float, str, pd.Timedelta, List[Union[float, str, pd.Timedelta]]]
        ] = None,
        strides: Optional[
            Union[float, str, pd.Timedelta, List[Union[float, str, pd.Timedelta]]]
        ] = None,
    ):
        # Cast functions to FuncWrapper, this avoids creating multiple
        # FuncWrapper objects for the same function in the FeatureDescriptor
        def to_func_wrapper(f: Callable) -> FuncWrapper:
            return f if isinstance(f, FuncWrapper) else FuncWrapper(f)

        functions = [to_func_wrapper(f) for f in to_list(functions)]
        # Convert the series names to list of tuples
        series_names = [to_tuple(names) for names in to_list(series_names)]
        # Assert that function inputs (series) all have the same length
        assert all(
            len(series_names[0]) == len(series_name_tuple)
            for series_name_tuple in series_names
        )
        # Convert the other types to list
        windows = to_list(windows)

        self.feature_descriptions: List[FeatureDescriptor] = []
        # Iterate over all combinations
        combinations = [functions, series_names, windows]
        for function, series_name, window in itertools.product(*combinations):  # type: ignore[call-overload]
            self.feature_descriptions.append(
                FeatureDescriptor(function, series_name, window, strides)
            )
```

Create a MultipleFeatureDescriptors object.

Create a list of features from all combinations of the given parameter lists. The total number of created FeatureDescriptors will be len(series_names)*len(functions)*len(windows); each created FeatureDescriptor receives all the given strides.

Parameters

functions: Union[FuncWrapper, Callable, List[Union[FuncWrapper, Callable]]] - The functions; these can be of either type (even mixed in a single list).

series_names: Union[str, Tuple[str, …], List[str], List[Tuple[str, …]]]

The names of the series on which the feature function should be applied.

- If series_names is a (list of) string(s) (or tuple(s) of a single string), then each function should require just one series as input.
- If series_names is a (list of) tuple(s) of strings, then each function should require len(tuple) series as input.

A list implies that multiple series (combinations) will be used to extract features from:

- If series_names is a string or a tuple of strings, then function will be called only once for the series of this argument.
- If series_names is a list of either strings or tuples of strings, then function will be called for each entry of this list.

Note

When passing a list as series_names, all items in this list should have the same type, i.e., either

- all a str,
- or all a tuple with the same length,

and they should perfectly match the function's input size.

windows: Union[float, str, pd.Timedelta, List[Union[float, str, pd.Timedelta]]] - All the window sizes.

strides: Union[float, str, pd.Timedelta, None, List[Union[float, str, pd.Timedelta]]], optional - All the strides. By default None.

Note

The windows and strides arguments should either both be numeric or both be pd.Timedelta (depending on the index datatype) when strides is not None.

class FeatureCollection (feature_descriptors=None)